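Update

Unfortunately, KubeSphere, a star CNCF project, has stopped distributing its open-source releases and has effectively gone closed source.

The following alternative resources may help: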

https://docs.kubesphere-carryon.top/zh

https://github.com/whenegghitsrock/kubesphere-carryon

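I. Environment preparation

0. Prerequisites:

Four servers

Operating system: CentOS 7.6

Network: all four servers can reach one another; this example uses a bridged virtual machine network

Server IPs and roles:

Set a static IP (run on all nodes)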

vi /etc/sysconfig/network-scripts/ifcfg-ens33
# Change the contents as follows (adjust the IP address for each node):
BOOTPROTO=none
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=192.168.19.121
NETMASK=255.255.255.0
GATEWAY=192.168.19.2
DNS1=114.114.114.114

Replace the official CentOS yum repositories (optional) (run on all nodes)

Reset the DNS servers to Google Public DNS

cat > /etc/resolv.conf << EOF
nameserver 8.8.8.8
nameserver 8.8.4.4
EOF

Back up the current yum repositories

cp -ar /etc/yum.repos.d /etc/yum.repos.d.bak

Remove the existing yum repository files

rm -f /etc/yum.repos.d/*

Create a new yum repository configuration file; this example uses the Tencent Cloud mirror

vim /etc/yum.repos.d/CentOS-Base.repo
# Contents:
[os]
name=Qcloud centos os - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/os/$basearch/
enabled=1
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

[updates]
name=Qcloud centos updates - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/updates/$basearch/
enabled=1
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

[centosplus]
name=Qcloud centosplus - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/centosplus/$basearch/
enabled=0
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

[cr]
name=Qcloud centos cr - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/cr/$basearch/
enabled=0
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

[extras]
name=Qcloud centos extras - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/extras/$basearch/
enabled=1
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

[fasttrack]
name=Qcloud centos fasttrack - $basearch
baseurl=http://mirrors.cloud.tencent.com/centos/$releasever/fasttrack/$basearch/
enabled=0
gpgcheck=1
gpgkey=http://mirrors.cloud.tencent.com/centos/RPM-GPG-KEY-CentOS-7

Update the yum cache

sudo yum clean all

sudo yum makecache
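The six stanzas in CentOS-Base.repo differ only in the repository id and the enabled flag, so the file can also be generated rather than typed by hand. The following is a minimal sketch under that assumption; `repo_stanza` and `render_repo_file` are hypothetical helper names (not part of yum), and the mirror URL is the Tencent Cloud address used in this article.

```shell
#!/bin/sh
# Sketch: generate the CentOS-Base.repo stanzas used in this article.
# repo_stanza and render_repo_file are illustrative helpers, not yum tooling.

MIRROR=http://mirrors.cloud.tencent.com/centos

# Print one stanza. $1 = repository id (os, updates, ...), $2 = 1 (enabled) or 0.
repo_stanza() {
  id=$1
  enabled=$2
  # \$basearch and \$releasever stay literal so that yum expands them later.
  cat <<EOF
[$id]
name=Qcloud centos $id - \$basearch
baseurl=$MIRROR/\$releasever/$id/\$basearch/
enabled=$enabled
gpgcheck=1
gpgkey=$MIRROR/RPM-GPG-KEY-CentOS-7
EOF
}

# Print the whole file: os, updates, and extras enabled; the rest disabled.
render_repo_file() {
  for spec in os:1 updates:1 centosplus:0 cr:0 extras:1 fasttrack:0; do
    repo_stanza "${spec%%:*}" "${spec##*:}"
    echo
  done
}

# Example (as root): render_repo_file > /etc/yum.repos.d/CentOS-Base.repo
```

Writing to standard output keeps the helper harmless to try; the redirect in the last comment is the only step that touches the system.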

Install utility packages (run on all nodes)

yum -y install vim wget unzip net-tools.x86_64 bind-utils conntrack socat
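Since KubeKey later checks each node for tools such as conntrack and socat, it can save a failed run to confirm the packages really landed on every node. A minimal sketch follows; `missing_tools` is a hypothetical helper name, and the command list assumes that net-tools provides `netstat` and bind-utils provides `dig`.

```shell
#!/bin/sh
# Sketch: report which of the expected commands are not on PATH.
# missing_tools is an illustrative helper name.

# Print each argument that is not an available command, one per line.
missing_tools() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# netstat comes from net-tools and dig from bind-utils.
missing=$(missing_tools vim wget unzip netstat dig conntrack socat)
if [ -n "$missing" ]; then
  # $missing is left unquoted on purpose so the names print on one line.
  echo "Not found on this node:" $missing
else
  echo "All expected tools are installed."
fi
```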

1. Disable the firewall

# Run on all nodes:

systemctl stop firewalld

systemctl disable firewalld

2. Disable SELinux so that Docker can access the host file system

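# Run on all nodes:

# Temporarily (switches to permissive mode until the next reboot)

setenforce 0

# Permanently (sets permissive mode in the configuration file)

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

3. Disable swap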

# Run on all nodes:

# Temporarily

swapoff -a

# Permanently (comments out every swap entry in /etc/fstab)

sed -ri 's/.*swap.*/#&/' /etc/fstab

4. Set the hostnames

# Run on k8s-node-1:

hostnamectl set-hostname k8s-node-1

# Run on k8s-node-2:

hostnamectl set-hostname k8s-node-2

# Run on k8s-node-3:

hostnamectl set-hostname k8s-node-3

# Run on k8s-nfs:

hostnamectl set-hostname k8s-nfs

# Run on all nodes:

cat >> /etc/hosts << EOF
192.168.19.121 k8s-node-1
192.168.19.122 k8s-node-2
192.168.19.123 k8s-node-3
192.168.19.124 k8s-nfs
EOF

5. Pass bridged IPv4 and IPv6 traffic to the iptables chains

# Run on all nodes:

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

6. Apply the configuration

# Run on all nodes:

sysctl --system

7. Time synchronization (optional)

# Run on all nodes:

yum install ntpdate -y

ntpdate time.windows.com

II. Install Kubernetes

0. Download KubeKey

# Run on k8s-node-1:

[root@k8s-node-1 ~]# cd /opt/

[root@k8s-node-1 opt]# mkdir kubekey

[root@k8s-node-1 opt]# ls

kubekey

[root@k8s-node-1 opt]# cd kubekey/

[root@k8s-node-1 kubekey]# export KKZONE=cn

[root@k8s-node-1 kubekey]# curl -sfL https://get-kk.kubesphere.io | sh -

Downloading kubekey v3.1.7 from https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v3.1.7/kubekey-v3.1.7-linux-amd64.tar.gz ...

Kubekey v3.1.7 Download Complete!

[root@k8s-node-1 kubekey]# ls -lhra

总用量 114M

-rw-r--r--. 1 root root 36M 11月 13 16:14 kubekey-v3.1.7-linux-amd64.tar.gz

-rwxr-xr-x. 1 root root 79M 10月 30 17:42 kk

drwxr-xr-x. 3 root root 21 11月 13 16:12 ..

drwxr-xr-x. 2 root root 57 11月 13 16:14 .

# List the Kubernetes versions that KubeKey supports

[root@k8s-node-1 kubekey]# ./kk version --show-supported-k8s

v1.19.0

v1.19.8

v1.19.9

v1.19.15

v1.20.4

v1.20.6

...

...

v1.30.5

v1.30.6

v1.31.0

v1.31.1

v1.31.2

[root@k8s-node-1 kubekey]#

1. Create the Kubernetes cluster deployment configuration (this example uses Kubernetes 1.23.6)

# Run on k8s-node-1

[root@k8s-node-1 kubekey]# pwd

/opt/kubekey

[root@k8s-node-1 kubekey]# ls

kk kubekey-v3.1.7-linux-amd64.tar.gz

[root@k8s-node-1 kubekey]# ./kk create config -f ksp-k8s-v1236.yaml --with-kubernetes v1.23.6

Generate KubeKey config file successfully

[root@k8s-node-1 kubekey]# ls

kk ksp-k8s-v1236.yaml kubekey-v3.1.7-linux-amd64.tar.gz

[root@k8s-node-1 kubekey]#

2. Edit the cluster deployment configuration file

# Run on k8s-node-1

[root@k8s-node-1 kubekey]# pwd

/opt/kubekey

[root@k8s-node-1 kubekey]# ls

kk ksp-k8s-v1236.yaml kubekey-v3.1.7-linux-amd64.tar.gz

[root@k8s-node-1 kubekey]# vi ksp-k8s-v1236.yaml

# The modified contents are as follows

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - { name: k8s-node-1, address: 192.168.19.121, internalAddress: 192.168.19.121, user: root, password: "root" }
  - { name: k8s-node-2, address: 192.168.19.122, internalAddress: 192.168.19.122, user: root, password: "root" }
  - { name: k8s-node-3, address: 192.168.19.123, internalAddress: 192.168.19.123, user: root, password: "root" }
  roleGroups:
    etcd:
    - k8s-node-1
    - k8s-node-2
    - k8s-node-3
    control-plane:
    - k8s-node-1
    - k8s-node-2
    - k8s-node-3
    worker:
    - k8s-node-1
    - k8s-node-2
    - k8s-node-3
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.23.6
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  storage:
    openebs:
      basePath: /data/openebs/local
  registry:
    privateRegistry: "registry.cn-beijing.aliyuncs.com"
    namespaceOverride: "kubesphereio"
    registryMirrors: [ ]
    insecureRegistries: [ ]
  addons: [ ]

The spec.hosts parameter defines the node servers:

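The spec.roleGroups parameter defines the roles of each node server:

The spec.controlPlaneEndpoint parameter defines the high-availability settings:

Other configuration parameters: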
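The names and addresses under spec.hosts should agree with the entries added to /etc/hosts earlier, so it can be useful to print them before deploying and compare. The following is a minimal sketch, assuming each host is written as a single-line `{ name: ..., address: ..., ... }` map as in the file above; `list_hosts` is a hypothetical helper name and not a KubeKey command.

```shell
#!/bin/sh
# Sketch: extract "name address" pairs from the inline host entries of a
# KubeKey config. list_hosts is an illustrative helper, not part of KubeKey.

# Reads the config on stdin and prints one "name address" line per host.
# Only single-line entries of the form "- { name: X, address: Y, ... }" match.
list_hosts() {
  sed -n 's/^[[:space:]]*-[[:space:]]*{[[:space:]]*name:[[:space:]]*\([^,]*\),[[:space:]]*address:[[:space:]]*\([^,]*\),.*/\1 \2/p'
}

# Example: list_hosts < ksp-k8s-v1236.yaml
```

Entries under roleGroups are plain `- name` items without braces, so they are ignored by the pattern.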

3. Deploy the Kubernetes cluster

# Run on k8s-node-1

[root@k8s-node-1 kubekey]# pwd

/opt/kubekey

[root@k8s-node-1 kubekey]# ls

kk ksp-k8s-v1236.yaml kubekey-v3.1.7-linux-amd64.tar.gz

[root@k8s-node-1 kubekey]# export KKZONE=cn

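[root@k8s-node-1 kubekey]# ./kk create cluster -f ksp-k8s-v1236.yaml

After the command starts, KubeKey checks the dependencies and other requirements for deploying Kubernetes. Once the checks pass, it asks you to confirm the installation. Type yes and press Enter to continue.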

_ __ _ _ __

| | / / | | | | / /

| |/ / _ _| |__ ___| |/ / ___ _ _

| \| | | | |_ \ / _ \ \ / _ \ | | |

| |\ \ |_| | |_) | __/ |\ \ __/ |_| |

\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |

__/ |

|___/

16:46:24 CST [GreetingsModule] Greetings

16:46:25 CST message: [k8s-node-3]

Greetings, KubeKey!

16:46:25 CST message: [k8s-node-1]

Greetings, KubeKey!

16:46:26 CST message: [k8s-node-2]

Greetings, KubeKey!

16:46:26 CST success: [k8s-node-3]

16:46:26 CST success: [k8s-node-1]

16:46:26 CST success: [k8s-node-2]

16:46:26 CST [NodePreCheckModule] A pre-check on nodes

16:46:26 CST success: [k8s-node-1]

16:46:26 CST success: [k8s-node-3]

16:46:26 CST success: [k8s-node-2]

16:46:26 CST [ConfirmModule] Display confirmation form

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| k8s-node-1 | y | y | y | y | y | y | | y | y | | y | | | | CST 16:46:26 |

| k8s-node-2 | y | y | y | y | y | y | | y | y | | y | | | | CST 16:38:04 |

| k8s-node-3 | y | y | y | y | y | y | | y | y | | y | | | | CST 16:38:09 |

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Install k8s with specify version: v1.23.6

Continue this installation? [yes/no]:

After the deployment completes, the terminal shows the following:

...

13:56:58 CST [AutoRenewCertsModule] Enable k8s certs renew service

13:56:58 CST success: [k8s-node-1]

13:56:58 CST success: [k8s-node-2]

13:56:58 CST success: [k8s-node-3]

13:56:58 CST [SaveKubeConfigModule] Save kube config as a configmap

13:56:58 CST success: [LocalHost]

13:56:58 CST [AddonsModule] Install addons

13:56:58 CST message: [LocalHost]

[0/0] enabled addons

13:56:58 CST success: [LocalHost]

13:56:58 CST Pipeline[CreateClusterPipeline] execute successfully

Installation is complete.

Please check the result using the command:

kubectl get pod -A

[root@k8s-node-1 kubekey]#

4. Verify the Kubernetes cluster status

Confirm that all three nodes are Ready and that every Pod is Running

# Run on k8s-node-1

[root@k8s-node-1 kubekey]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-node-1 Ready control-plane,master,worker 14m v1.23.6 192.168.19.121 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://24.0.9

k8s-node-2 Ready control-plane,master,worker 14m v1.23.6 192.168.19.122 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://24.0.9

k8s-node-3 Ready control-plane,master,worker 14m v1.23.6 192.168.19.123 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://24.0.9

[root@k8s-node-1 kubekey]#

[root@k8s-node-1 kubekey]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-6f996c8485-ct2vp 1/1 Running 0 15m 10.233.113.3 k8s-node-2 <none> <none>

kube-system calico-node-gf72r 1/1 Running 0 15m 192.168.19.121 k8s-node-1 <none> <none>

kube-system calico-node-hf7zj 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system calico-node-q86jq 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system coredns-d8b4f5447-jdgql 1/1 Running 0 15m 10.233.113.2 k8s-node-2 <none> <none>

kube-system coredns-d8b4f5447-x99fl 1/1 Running 0 15m 10.233.113.1 k8s-node-2 <none> <none>

kube-system kube-apiserver-k8s-node-1 1/1 Running 0 16m 192.168.19.121 k8s-node-1 <none> <none>

kube-system kube-apiserver-k8s-node-2 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system kube-apiserver-k8s-node-3 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system kube-controller-manager-k8s-node-1 1/1 Running 0 16m 192.168.19.121 k8s-node-1 <none> <none>

kube-system kube-controller-manager-k8s-node-2 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system kube-controller-manager-k8s-node-3 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system kube-proxy-bpvgx 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system kube-proxy-dkk4f 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system kube-proxy-glq2s 1/1 Running 0 15m 192.168.19.121 k8s-node-1 <none> <none>

kube-system kube-scheduler-k8s-node-1 1/1 Running 0 16m 192.168.19.121 k8s-node-1 <none> <none>

kube-system kube-scheduler-k8s-node-2 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system kube-scheduler-k8s-node-3 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system nodelocaldns-bmctw 1/1 Running 0 15m 192.168.19.122 k8s-node-2 <none> <none>

kube-system nodelocaldns-spwfn 1/1 Running 0 15m 192.168.19.123 k8s-node-3 <none> <none>

kube-system nodelocaldns-xkbk8 1/1 Running 0 15m 192.168.19.121 k8s-node-1 <none> <none>

[root@k8s-node-1 kubekey]#
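With more Pods than fit on one screen, scanning the STATUS column by eye gets error-prone. A minimal sketch of filtering the two listings above instead; `not_ready_nodes` and `not_running_pods` are hypothetical helper names, and the column positions assume the default `kubectl get` table layout shown in this article.

```shell
#!/bin/sh
# Sketch: filter kubectl table output for anything that is not healthy.
# Both helpers read the table on stdin and skip the header row.

# Input: `kubectl get nodes`. Prints "name status" for nodes whose STATUS
# column is not exactly "Ready" (so "Ready,SchedulingDisabled" is listed too).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1, $2 }'
}

# Input: `kubectl get pods -A`. Prints "namespace/name status" for Pods that
# are neither Running nor Completed.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Example:
#   kubectl get nodes | not_ready_nodes
#   kubectl get pods -A | not_running_pods
```

Empty output from both commands corresponds to the healthy state shown in the transcripts.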

[root@k8s-node-1 kubekey]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers v3.27.4 6b1e38763f40 4 months ago 75.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/cni v3.27.4 dc6f84c32585 4 months ago 196MB

registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol v3.27.4 72bfa61e35b3 4 months ago 15.5MB

registry.cn-beijing.aliyuncs.com/kubesphereio/node v3.27.4 3dd4390f2a85 4 months ago 339MB

registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy 2.9.6-alpine 52687313354f 8 months ago 25.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache 1.22.20 ff71cd4ea5ae 21 months ago 67.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver v1.23.6 8fa62c12256d 2 years ago 135MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy v1.23.6 4c0375452406 2 years ago 112MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager v1.23.6 df7b72818ad2 2 years ago 125MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler v1.23.6 595f327f224a 2 years ago 53.5MB

registry.cn-beijing.aliyuncs.com/kubesphereio/coredns 1.8.6 a4ca41631cc7 3 years ago 46.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/pause 3.6 6270bb605e12 3 years ago 683kB

[root@k8s-node-1 kubekey]#

5. Configure registry mirrors inside China

# Run on k8s-node-1, k8s-node-2, and k8s-node-3; reboot all three servers after the change

vi /etc/docker/daemon.json

# Add the following key-value pair:

"registry-mirrors": [

"https://5tqw56kt.mirror.aliyuncs.com",

"https://docker.hpcloud.cloud",

"https://docker.m.daocloud.io",

"https://docker.1panel.live",

"http://mirrors.ustc.edu.cn",

"https://docker.chenby.cn",

"https://docker.ckyl.me",

"http://mirror.azure.cn",

"https://hub.rat.dev"

]

III. Integrate the NFS network file system

0. Install the NFS client

# Run on all nodes

[root@k8s-node-1 opt]# yum install nfs-utils

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

extras | 2.9 kB 00:00:00

os | 3.6 kB 00:00:00

updates | 2.9 kB 00:00:00

正在解决依赖关系

--> 正在检查事务

...

...

已安装:

nfs-utils.x86_64 1:1.3.0-0.68.el7.2

作为依赖被安装:

gssproxy.x86_64 0:0.7.0-30.el7_9 keyutils.x86_64 0:1.5.8-3.el7 libbasicobjects.x86_64 0:0.1.1-32.el7

libcollection.x86_64 0:0.7.0-32.el7 libevent.x86_64 0:2.0.21-4.el7 libini_config.x86_64 0:1.3.1-32.el7

libnfsidmap.x86_64 0:0.25-19.el7 libpath_utils.x86_64 0:0.2.1-32.el7 libref_array.x86_64 0:0.1.5-32.el7

libtirpc.x86_64 0:0.2.4-0.16.el7 libverto-libevent.x86_64 0:0.2.5-4.el7 quota.x86_64 1:4.01-19.el7

quota-nls.noarch 1:4.01-19.el7 rpcbind.x86_64 0:0.2.0-49.el7 tcp_wrappers.x86_64 0:7.6-77.el7

完毕!

[root@k8s-node-1 opt]#

1. Configure the NFS server

Configure the NFS server daemon

# Run on k8s-nfs:

[root@k8s-nfs nfs]# systemctl enable nfs-server

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

[root@k8s-nfs nfs]#

[root@k8s-nfs nfs]# systemctl start nfs-server

[root@k8s-nfs nfs]# systemctl status nfs-server

● nfs-server.service - NFS server and services

Loaded: loaded (/usr/lib/systemd/system/nfs-server.service; enabled; vendor preset: disabled)

Active: active (exited) since 二 2024-10-08 10:44:38 CST; 3s ago

Process: 63113 ExecStartPost=/bin/sh -c if systemctl -q is-active gssproxy; then systemctl reload gssproxy ; fi (code=exited, status=0/SUCCESS)

Process: 63096 ExecStart=/usr/sbin/rpc.nfsd $RPCNFSDARGS (code=exited, status=0/SUCCESS)

Process: 63094 ExecStartPre=/usr/sbin/exportfs -r (code=exited, status=0/SUCCESS)

Main PID: 63096 (code=exited, status=0/SUCCESS)

Tasks: 0

Memory: 0B

CGroup: /system.slice/nfs-server.service

10月 08 10:44:38 k8s-master systemd[1]: Starting NFS server and services...

10月 08 10:44:38 k8s-master systemd[1]: Started NFS server and services.

[root@k8s-nfs nfs]#

[root@k8s-nfs nfs]# cat /proc/fs/nfsd/versions

-2 +3 +4 +4.1 +4.2

[root@k8s-nfs nfs]#

Configure the NFS export policy

# Run on k8s-nfs:

[root@k8s-nfs nfs]# pwd

/opt/nfs

[root@k8s-nfs nfs]# ls -lhra

总用量 0

drwxr-xr-x. 2 root root 6 9月 30 01:15 rw

drwxr-xr-x. 3 root root 17 10月 8 18:49 ..

drwxr-xr-x. 4 root root 26 10月 8 18:49 .

[root@k8s-nfs nfs]# vi /etc/exports

# Edit /etc/exports and add the following line (rw grants read-write access, ro grants read-only access; rw is used here):

/opt/nfs/rw 192.168.19.0/24(rw,sync,no_subtree_check,no_root_squash)

Reload the NFS server

# Run on k8s-nfs:

[root@k8s-nfs nfs]# exportfs -f

[root@k8s-nfs nfs]# systemctl reload nfs-server

[root@k8s-nfs nfs]#
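A typo in /etc/exports tends to show up only later, when the provisioner Pod fails to mount the share. One way to catch it early is to confirm that the file really contains an entry for the shared directory. A minimal sketch follows; `has_export` is a hypothetical helper name, and it checks only the first field of each line, not the client list or the options.

```shell
#!/bin/sh
# Sketch: check that an exports file has an entry for a given directory.
# has_export is an illustrative helper, not part of nfs-utils.

# $1 = path to the exports file, $2 = exported directory.
# Returns 0 if some line's first field equals the directory, 1 otherwise.
has_export() {
  awk -v dir="$2" '$1 == dir { found = 1 } END { exit !found }' "$1"
}

# Example (on k8s-nfs):
#   has_export /etc/exports /opt/nfs/rw && echo "export entry present"
```

After the reload, `exportfs -v` on the server or `showmount -e 192.168.19.124` from a cluster node lists what is actually being exported.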

[root@k8s-nfs nfs]# systemctl status nfs-server

● nfs-server.service - NFS server and services

Loaded: loaded (/usr/lib/systemd/system/nfs-server.service; enabled; vendor preset: disabled)

Drop-In: /run/systemd/generator/nfs-server.service.d

└─order-with-mounts.conf

Active: active (exited) since 二 2024-10-08 18:55:03 CST; 6min ago

Process: 15147 ExecReload=/usr/sbin/exportfs -r (code=exited, status=0/SUCCESS)

Process: 15113 ExecStartPost=/bin/sh -c if systemctl -q is-active gssproxy; then systemctl reload gssproxy ; fi (code=exited, status=0/SUCCESS)

Process: 15060 ExecStart=/usr/sbin/rpc.nfsd $RPCNFSDARGS (code=exited, status=0/SUCCESS)

Process: 15059 ExecStartPre=/usr/sbin/exportfs -r (code=exited, status=0/SUCCESS)

Main PID: 15060 (code=exited, status=0/SUCCESS)

10月 08 18:55:02 k8s-nfs systemd[1]: Starting NFS server and services...

10月 08 18:55:03 k8s-nfs systemd[1]: Started NFS server and services.

10月 08 19:01:49 k8s-nfs systemd[1]: Reloading NFS server and services.

10月 08 19:01:49 k8s-nfs systemd[1]: Reloaded NFS server and services.

[root@k8s-nfs nfs]#

2. Download the NFS provisioner manifests

# Run on k8s-node-1

[root@k8s-node-1 opt]# wget https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/archive/refs/tags/nfs-subdir-external-provisioner-4.0.18.zip

--2024-11-14 14:22:24-- https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/archive/refs/tags/nfs-subdir-external-provisioner-4.0.18.zip

正在解析主机 github.com (github.com)... 20.205.243.166

正在连接 github.com (github.com)|20.205.243.166|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 302 Found

位置:https://codeload.github.com/kubernetes-sigs/nfs-subdir-external-provisioner/zip/refs/tags/nfs-subdir-external-provisioner-4.0.18 [跟随至新的 URL]

--2024-11-14 14:22:25-- https://codeload.github.com/kubernetes-sigs/nfs-subdir-external-provisioner/zip/refs/tags/nfs-subdir-external-provisioner-4.0.18

正在解析主机 codeload.github.com (codeload.github.com)... 20.205.243.165

正在连接 codeload.github.com (codeload.github.com)|20.205.243.165|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:未指定 [application/zip]

正在保存至: “nfs-subdir-external-provisioner-4.0.18.zip”

[ <=> ] 8,978,535 330KB/s 用时 31s

2024-11-14 14:22:56 (286 KB/s) - “nfs-subdir-external-provisioner-4.0.18.zip” 已保存 [8978535]

[root@k8s-node-1 opt]# ls

cni containerd kubekey nfs-subdir-external-provisioner-4.0.18.zip

[root@k8s-node-1 opt]#

[root@k8s-node-1 opt]# unzip nfs-subdir-external-provisioner-4.0.18.zip

...

...

[root@k8s-node-1 opt]# ls

cni nfs-subdir-external-provisioner-4.0.18.zip

containerd nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18

kubekey

[root@k8s-node-1 opt]#

[root@k8s-node-1 opt]# cd nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18/

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# ls

CHANGELOG.md cmd deploy Dockerfile.multiarch LICENSE OWNERS_ALIASES release-tools

charts code-of-conduct.md docker go.mod Makefile README.md SECURITY_CONTACTS

cloudbuild.yaml CONTRIBUTING.md Dockerfile go.sum OWNERS RELEASE.md vendor

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

3. Create the namespace

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl get ns

NAME STATUS AGE

default Active 30m

kube-node-lease Active 30m

kube-public Active 30m

kube-system Active 30m

kubekey-system Active 29m

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl create ns nfs-system

namespace/nfs-system created

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl get ns

NAME STATUS AGE

default Active 30m

kube-node-lease Active 30m

kube-public Active 30m

kube-system Active 30m

kubekey-system Active 29m

nfs-system Active 3s

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# sed -i'' "s/namespace:.*/namespace: nfs-system/g" ./deploy/rbac.yaml ./deploy/deployment.yaml

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

4. Configure RBAC authorization

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl create -f deploy/rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

5. Configure the NFS subdir external provisioner

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# vi deploy/deployment.yaml

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

The modified contents are as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.19.124
            - name: NFS_PATH
              value: /opt/nfs/rw
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.19.124
            path: /opt/nfs/rw

Once the file is configured, create the NFS subdir external provisioner

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl apply -f deploy/deployment.yaml

deployment.apps/nfs-client-provisioner created

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

Check the Deployment and Pod status

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl get deployment,pods -n nfs-system

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nfs-client-provisioner 1/1 1 1 41s

NAME READY STATUS RESTARTS AGE

pod/nfs-client-provisioner-6c64c8cc45-t9kfd 1/1 Running 0 41s

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

6. Deploy the StorageClass

Modify the StorageClass configuration

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# vi deploy/class.yaml

# The modified contents are as follows:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl apply -f deploy/class.yaml

storageclass.storage.k8s.io/nfs-client created

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

Check the StorageClass

# Run on k8s-node-1

[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 57s

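[root@k8s-node-1 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18]#

IV. Deploy KubeSphere (version 4.1.2)

0. Install the core component, KubeSphere Core

Install KubeSphere Core, the core component of KubeSphere, with Helm

KubeKey installs Helm automatically when it deploys the Kubernetes cluster, so no manual installation is needed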
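The Helm command in this step is one long line whose only varying parts are the chart location, the image registry, and the host cluster name. A minimal sketch of wrapping it in a function so those three values are explicit; `install_ks_core` and the `HELM` override variable are hypothetical names introduced here, while every flag is copied from the command used in this article.

```shell
#!/bin/sh
# Sketch: wrap the ks-core Helm command from this article in a function.
# install_ks_core and the HELM variable are illustrative, not KubeSphere tooling.

# $1 = chart URL or local .tgz path, $2 = image registry, $3 = host cluster name.
# Set HELM=echo to print the arguments instead of running Helm.
install_ks_core() {
  chart=$1
  registry=$2
  cluster=$3
  "${HELM:-helm}" upgrade --install \
    -n kubesphere-system --create-namespace \
    ks-core "$chart" \
    --debug --wait \
    --set "global.imageRegistry=$registry" \
    --set "extension.imageRegistry=$registry" \
    --set "hostClusterName=$cluster"
}

# Example (dry print, nothing is installed):
#   HELM=echo install_ks_core /opt/ks-core-1.1.3.tgz \
#     swr.cn-southwest-2.myhuaweicloud.com/ks host
```

Passing the registry once and reusing it for both `--set` flags avoids the two values drifting apart when the command is edited.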

# Run on k8s-node-1

[root@k8s-node-1 opt]# helm upgrade --install -n kubesphere-system --create-namespace ks-core https://charts.kubesphere.io/main/ks-core-1.1.3.tgz --debug --wait --set global.imageRegistry=swr.cn-southwest-2.myhuaweicloud.com/ks --set extension.imageRegistry=swr.cn-southwest-2.myhuaweicloud.com/ks --set hostClusterName=host

If network problems prevent Helm from installing directly from the remote chart URL, download the chart, copy it to the node, and install from the local file:

[root@k8s-node-1 opt]# ls -lhra

总用量 8.7M

drwxr-xr-x. 9 root root 4.0K 3月 14 2023 nfs-subdir-external-provisioner-nfs-subdir-external-provisioner-4.0.18

-rw-r--r--. 1 root root 8.6M 11月 14 14:22 nfs-subdir-external-provisioner-4.0.18.zip

drwxr-xr-x. 3 root root 98 11月 14 16:50 kubekey

-rw-r--r--. 1 root root 81K 11月 16 20:14 ks-core-1.1.3.tgz

drwx--x--x. 4 root root 28 11月 14 13:49 containerd

drwxr-xr-x. 3 kube root 17 11月 14 13:48 cni

dr-xr-xr-x. 17 root root 224 11月 13 23:32 ..

drwxr-xr-x. 6 root root 203 11月 16 20:14 .

[root@k8s-node-1 opt]#

[root@k8s-node-1 opt]# helm upgrade --install -n kubesphere-system --create-namespace ks-core /opt/ks-core-1.1.3.tgz --debug --wait --set global.imageRegistry=swr.cn-southwest-2.myhuaweicloud.com/ks --set extension.imageRegistry=swr.cn-southwest-2.myhuaweicloud.com/ks --set hostClusterName=my-k8s-cluster

If the images are pulled onto the nodes in advance, KubeSphere Core deploys in a matter of seconds

# Images that can be pulled in advance

# ks-core

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-apiserver:v4.1.2

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-console:v4.1.2

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-controller-manager:v4.1.2

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/kubectl:v1.27.16

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/redis:7.2.4-alpine

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/haproxy:2.9.6-alpine

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-extensions-museum:v1.1.2

# grafana

swr.cn-southwest-2.myhuaweicloud.com/ks/curlimages/curl:7.85.0

swr.cn-southwest-2.myhuaweicloud.com/ks/grafana/grafana:10.4.1

swr.cn-southwest-2.myhuaweicloud.com/ks/library/busybox:1.31.1

# opensearch

swr.cn-southwest-2.myhuaweicloud.com/ks/opensearchproject/opensearch:2.8.0

swr.cn-southwest-2.myhuaweicloud.com/ks/library/busybox:1.35.0

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/opensearch-curator:v0.0.5

swr.cn-southwest-2.myhuaweicloud.com/ks/opensearchproject/opensearch-dashboards:2.8.0

# whizard-logging

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/kubectl:v1.27.12

swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/log-sidecar-injector:v1.3.0

swr.cn-southwest-2.myhuaweicloud.com/ks/jimmidyson/configmap-reload:v0.9.0

swr.cn-southwest-2.myhuaweicloud.com/ks/elastic/filebeat:6.7.0

swr.cn-southwest-2.myhuaweicloud.com/ks/timberio/vector:0.39.0-debian

swr.cn-southwest-2.myhuaweicloud.com/ks/library/alpine:3.14

After the installation completes, the console shows the following:

NOTES:

Thank you for choosing KubeSphere Helm Chart.

Please be patient and wait for several seconds for the KubeSphere deployment to complete.

1. Wait for Deployment Completion

Confirm that all KubeSphere components are running by executing the following command:

kubectl get pods -n kubesphere-system

2. Access the KubeSphere Console

Once the deployment is complete, you can access the KubeSphere console using the following URL:

http://192.168.19.121:30880

3. Login to KubeSphere Console

Use the following credentials to log in:

Account: admin

Password: P@88w0rd

NOTE: It is highly recommended to change the default password immediately after the first login.

For additional information and details, please visit https://kubesphere.io.

1. Verify the KubeSphere Core status

# Run on k8s-node-1

[root@k8s-node-1 opt]# kubectl get pods -n kubesphere-system

NAME READY STATUS RESTARTS AGE

extensions-museum-558f99bcd4-4jnwj 1/1 Running 0 2m3s

ks-apiserver-57d575b886-4kgwx 1/1 Running 0 2m3s

ks-console-b9ff8948c-5c42w 1/1 Running 0 2m3s

ks-controller-manager-8c4c4c68f-ww8f9 1/1 Running 0 2m3s

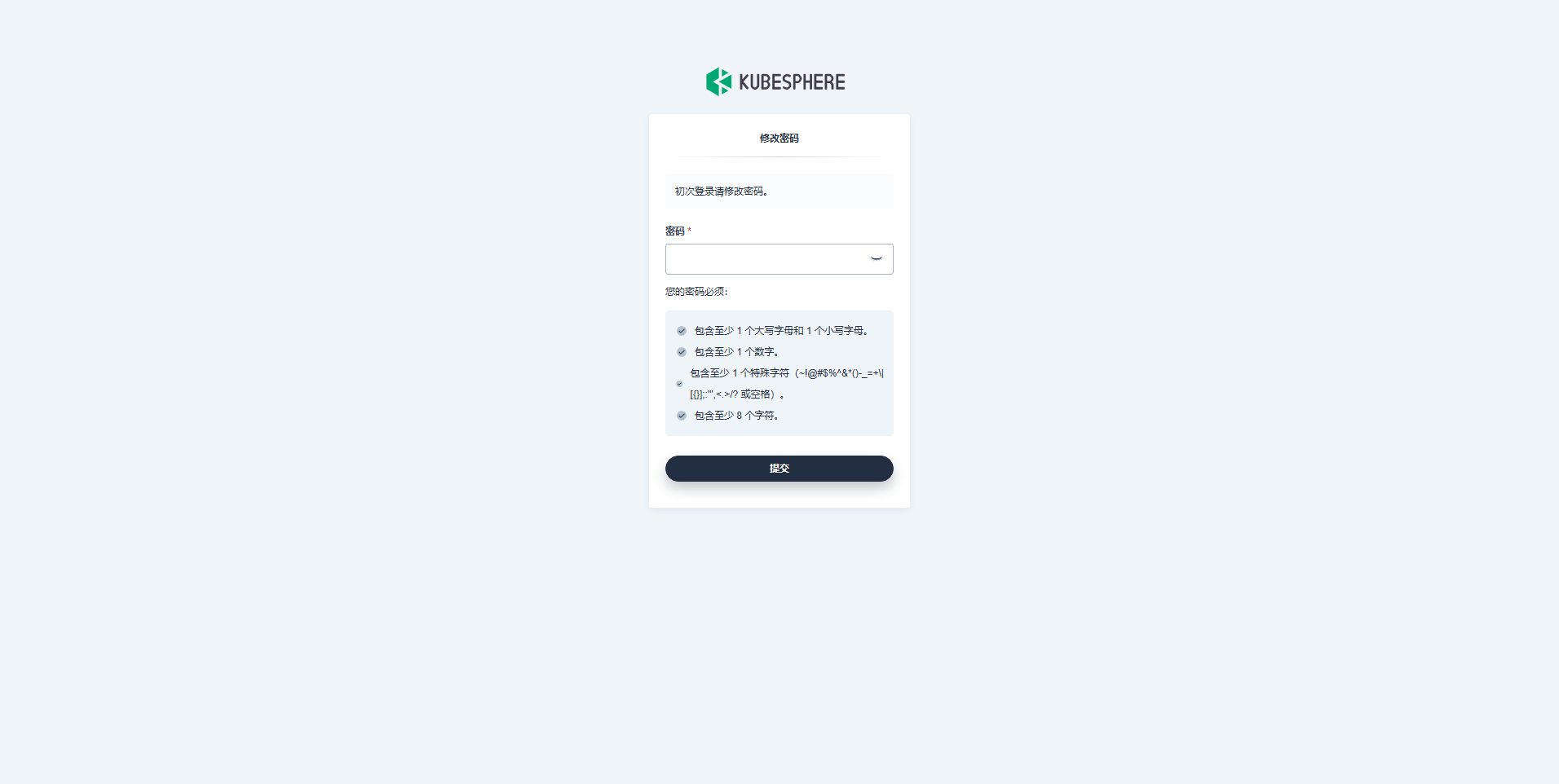

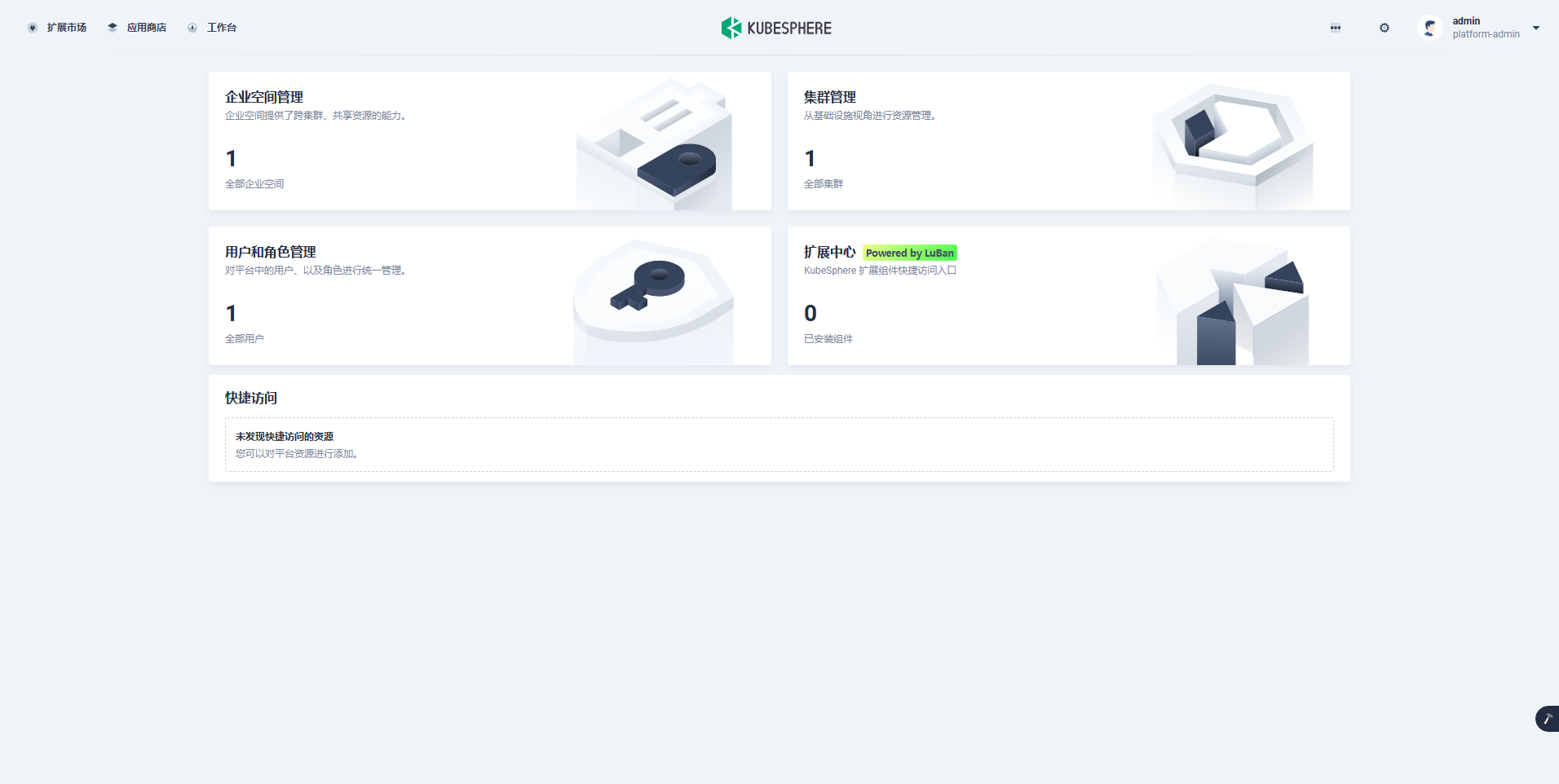

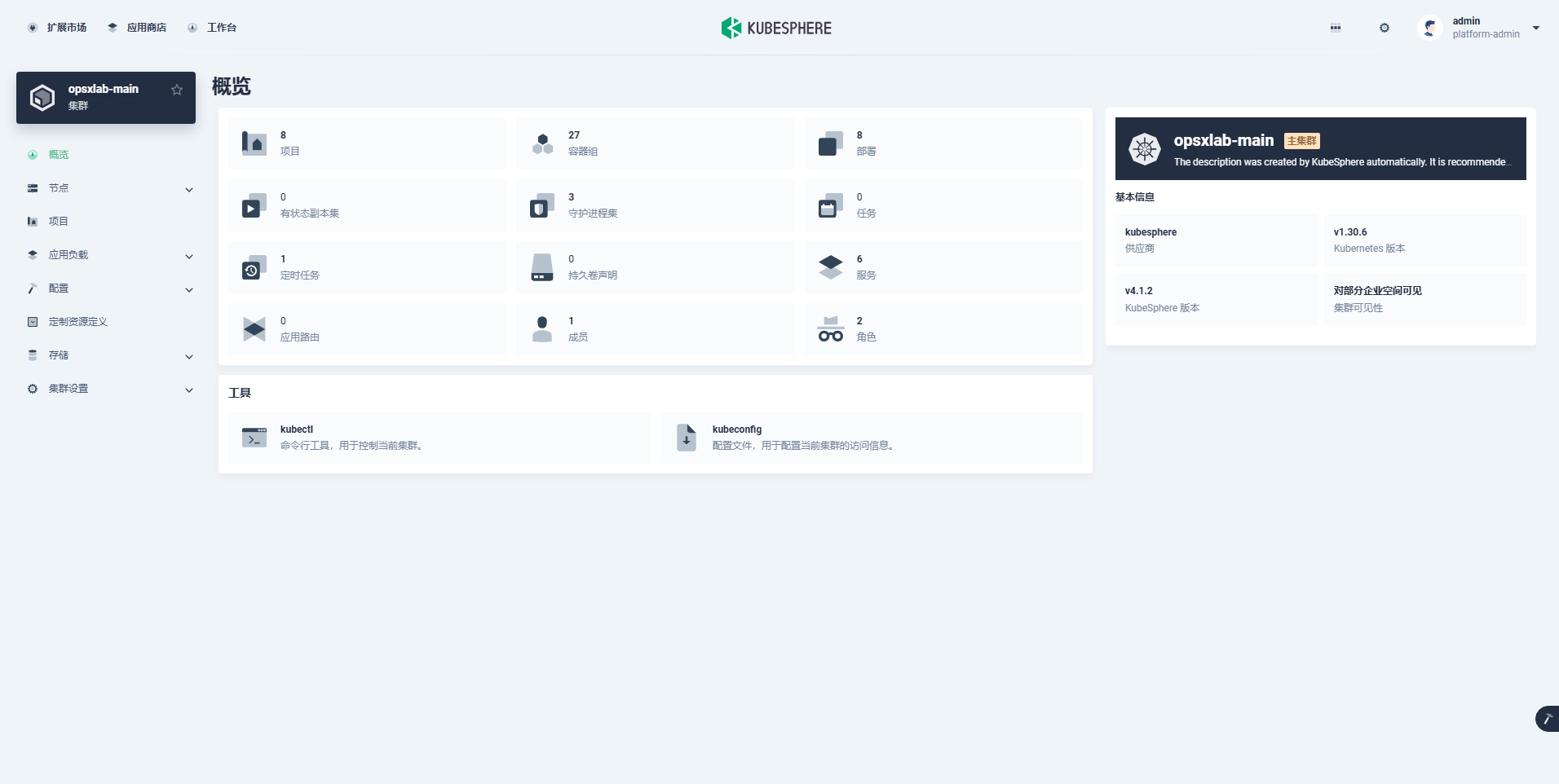

[root@k8s-node-1 opt]#

2. Use the KubeSphere console

Console information

Login URL: http://192.168.19.121:30880

Account: admin Password: P@88w0rd

Change the password

Go to the console page

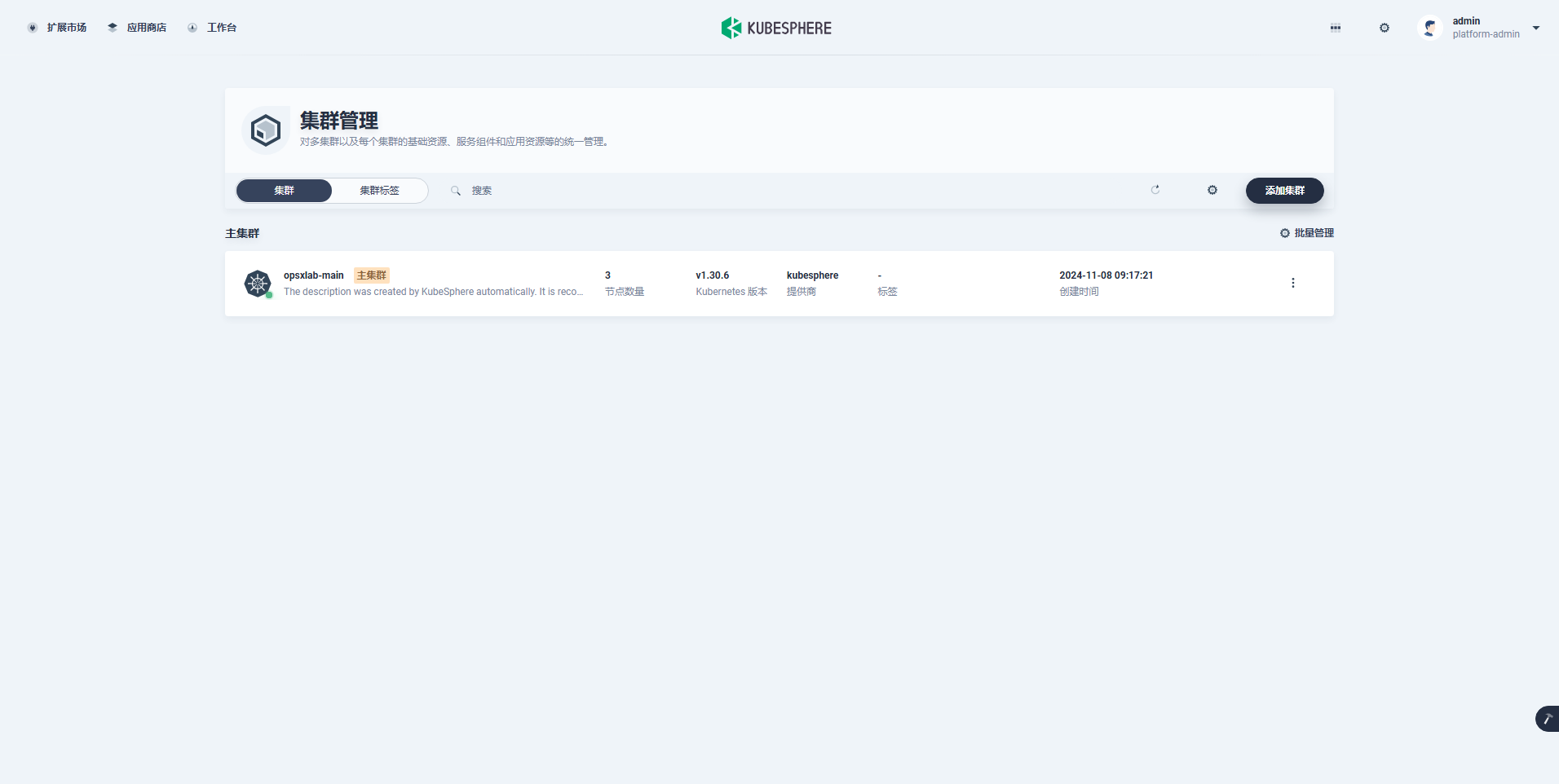

On the Workbench page, click Cluster Management to view the cluster list

Click the cluster to open its management page

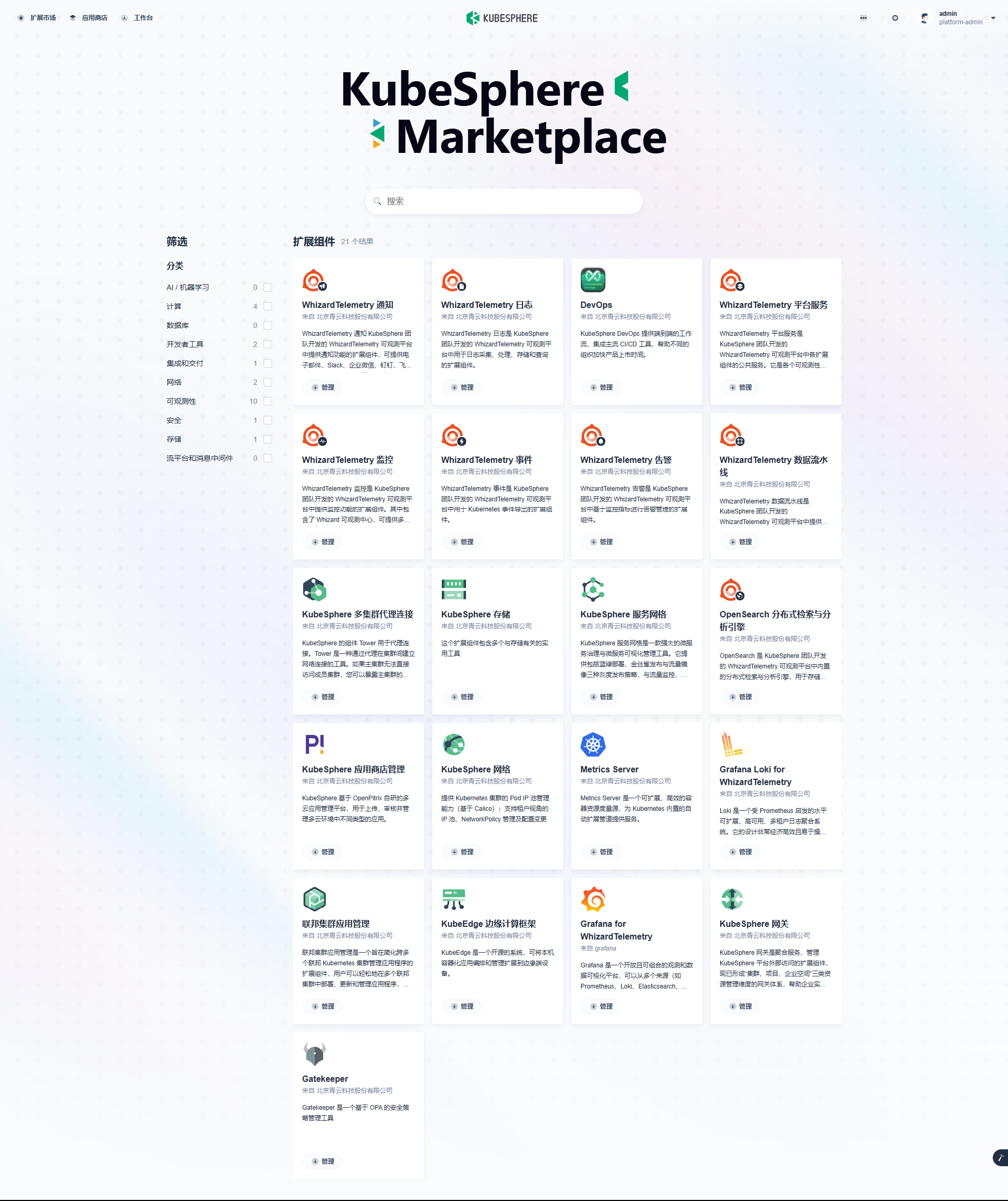

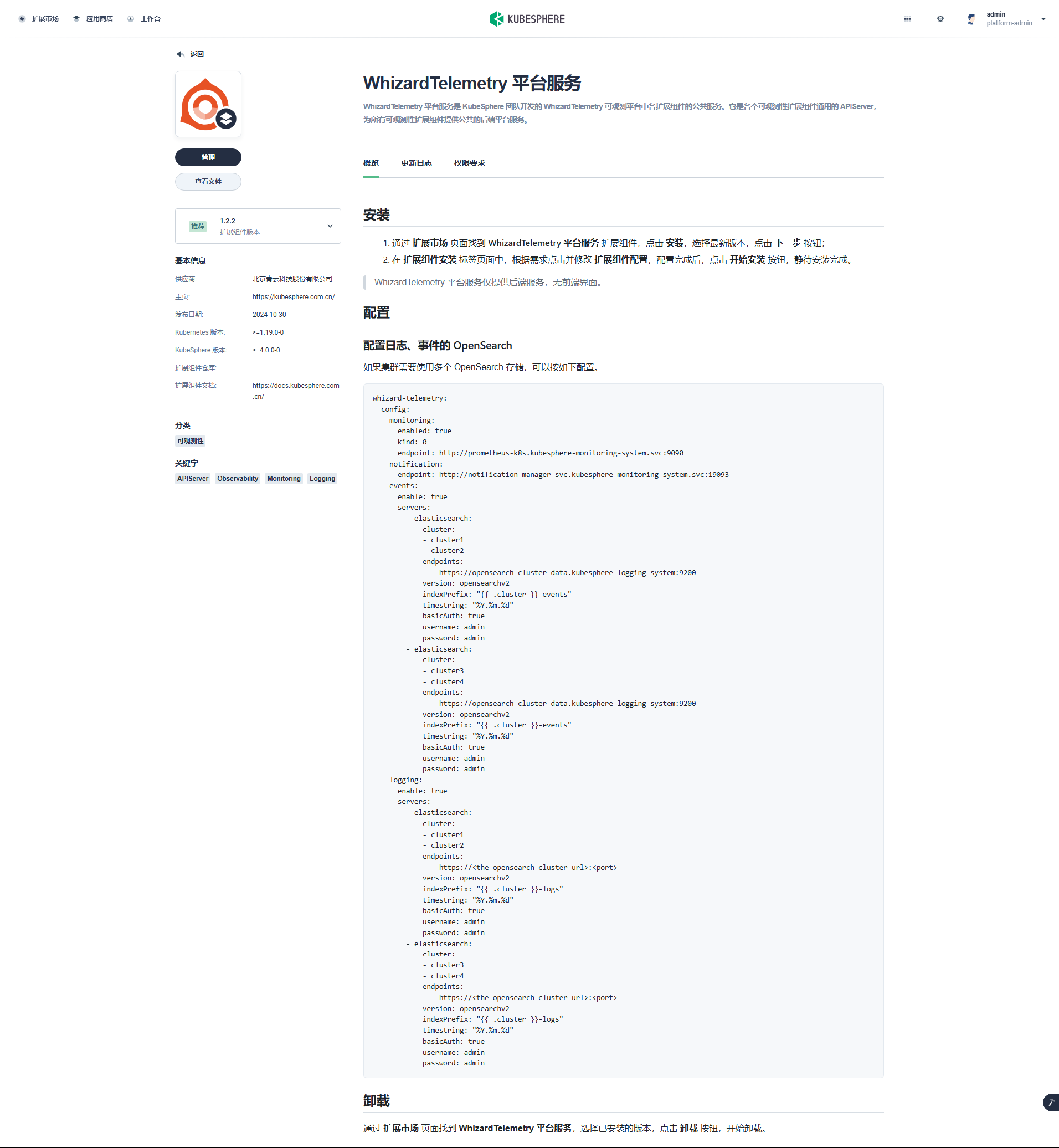

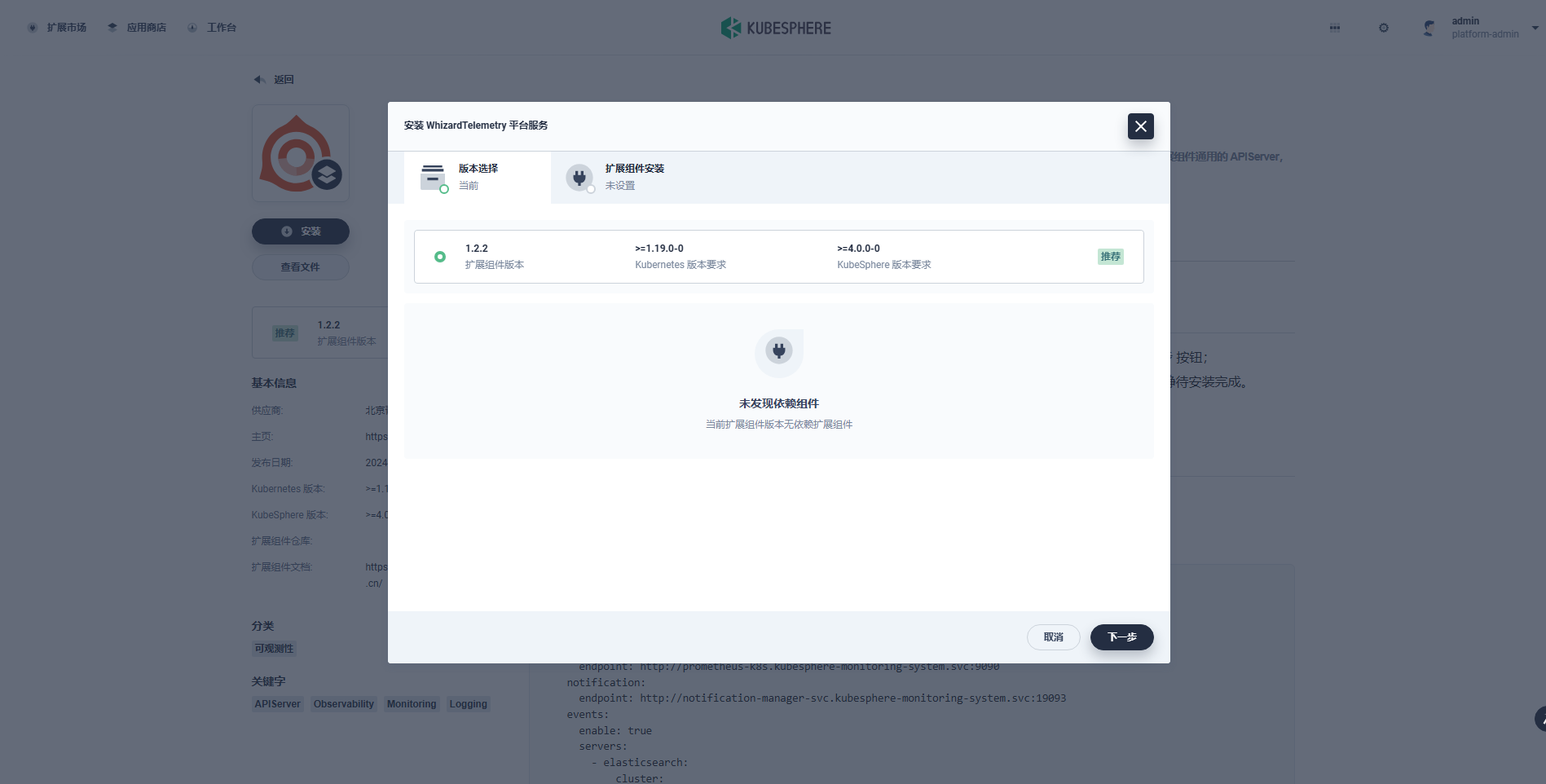

3. Install extensions

In the Extension Marketplace you can install and manage the extensions it offers. KubeSphere 4.1.2 ships with 21 extensions by default

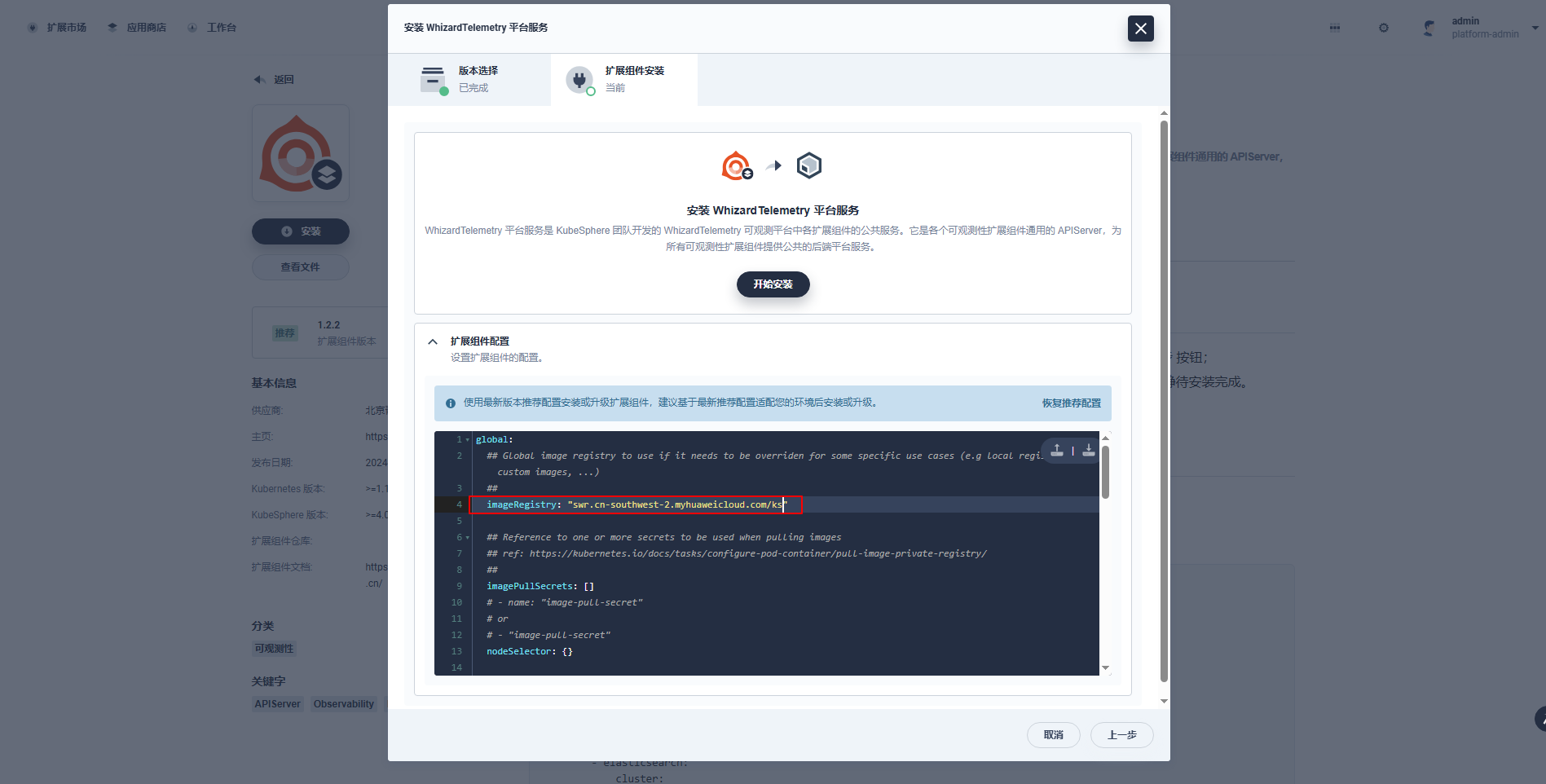

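Install the [WhizardTelemetry Platform Service] extension and the [WhizardTelemetry Monitoring] extension

Extension installation: the image registry defaults to one hosted outside China. To avoid deployment failures, on the extension configuration page change imageRegistry to the Huawei Cloud registry that KubeSphere officially provides: swr.cn-southwest-2.myhuaweicloud.com/ks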
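Changing imageRegistry effectively puts the Huawei Cloud address in front of each image reference. The sketch below illustrates that mapping so individual images can be pulled or checked by hand; it is inferred only from the image list earlier in this article (for example `ks/grafana/grafana:10.4.1` and `ks/library/busybox:1.31.1`) and may not hold for every image. `mirror_ref` and the `KS_REGISTRY` variable are hypothetical names.

```shell
#!/bin/sh
# Sketch: prefix an image reference with the mirror registry used in this article.
# mirror_ref and KS_REGISTRY are illustrative; the mapping is an assumption
# inferred from the image list above, not a documented rule.

# $1 = image reference such as "grafana/grafana:10.4.1" or "busybox:1.31.1".
# References without an organization are placed under "library/".
mirror_ref() {
  registry=${KS_REGISTRY:-swr.cn-southwest-2.myhuaweicloud.com/ks}
  case $1 in
    */*) printf '%s/%s\n' "$registry" "$1" ;;
    *)   printf '%s/library/%s\n' "$registry" "$1" ;;
  esac
}

# Example: docker pull "$(mirror_ref grafana/grafana:10.4.1)"
```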

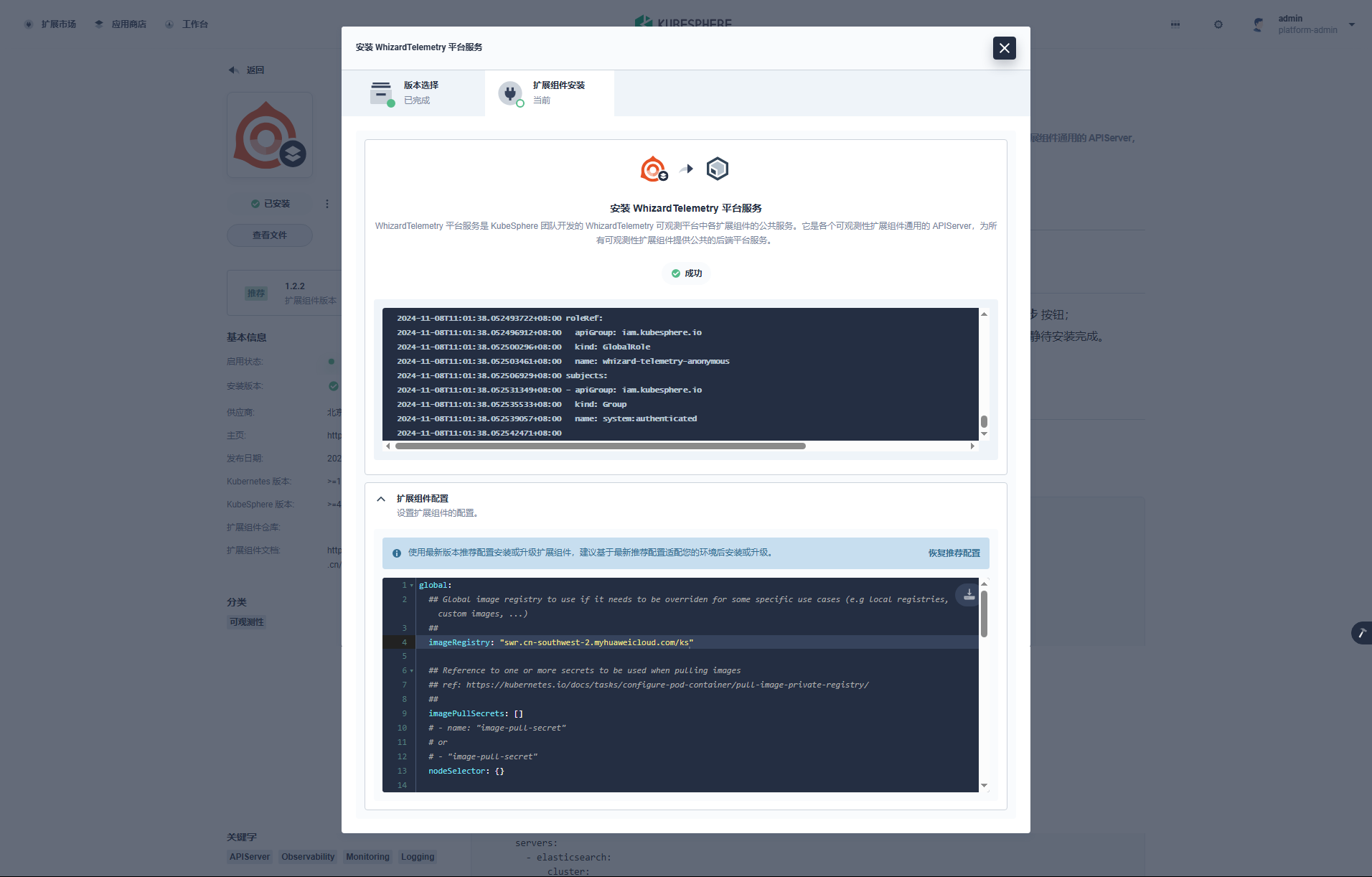

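Click Install. The installation progress is displayed visually, and the extension is enabled by default once installed

4. Verify the extensions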

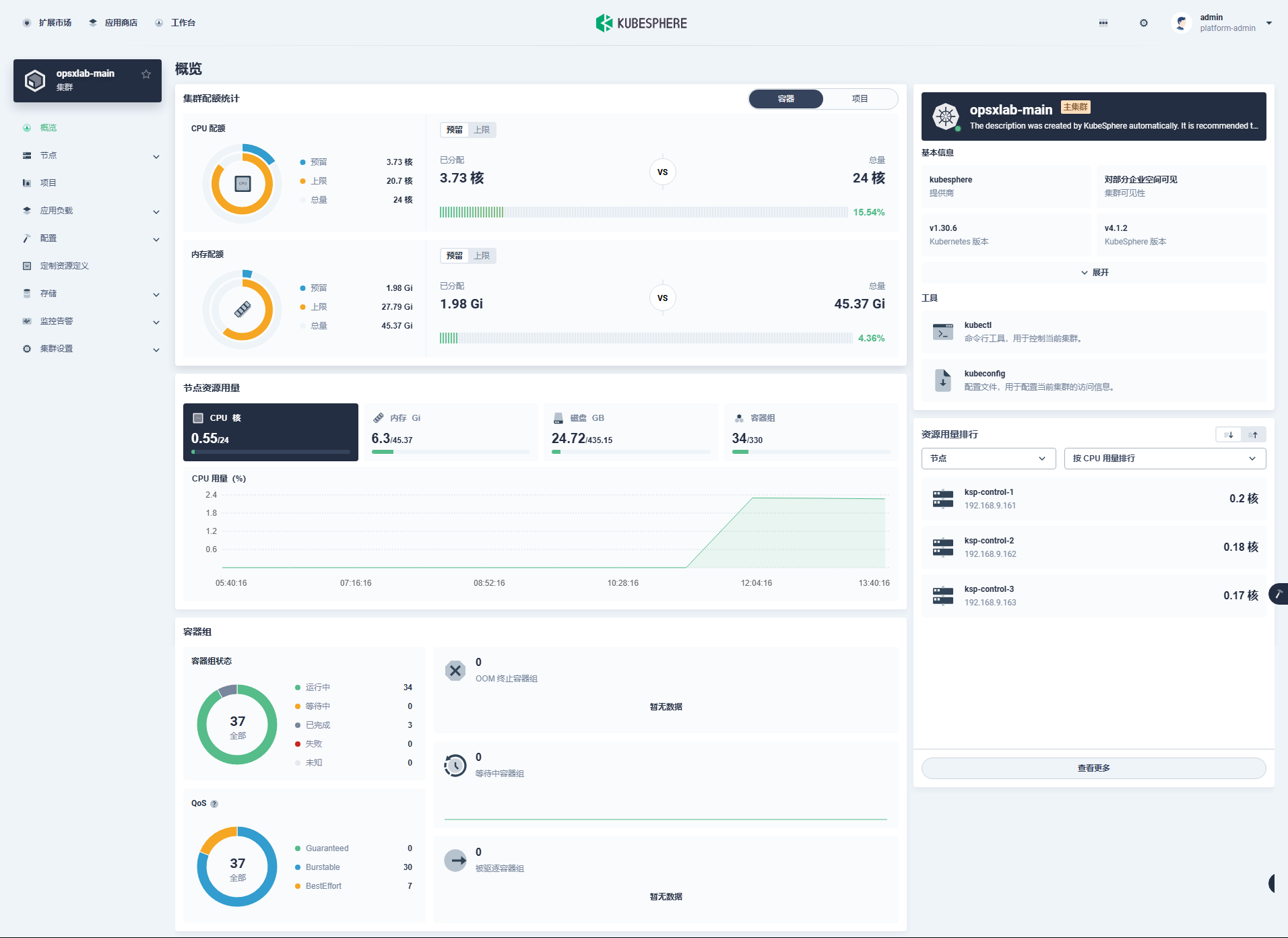

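After the platform service and monitoring extensions are installed, return to the cluster management page and refresh it. The overview page now shows more information, and the left-hand menu includes monitoring entries

5. Configure the App Store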

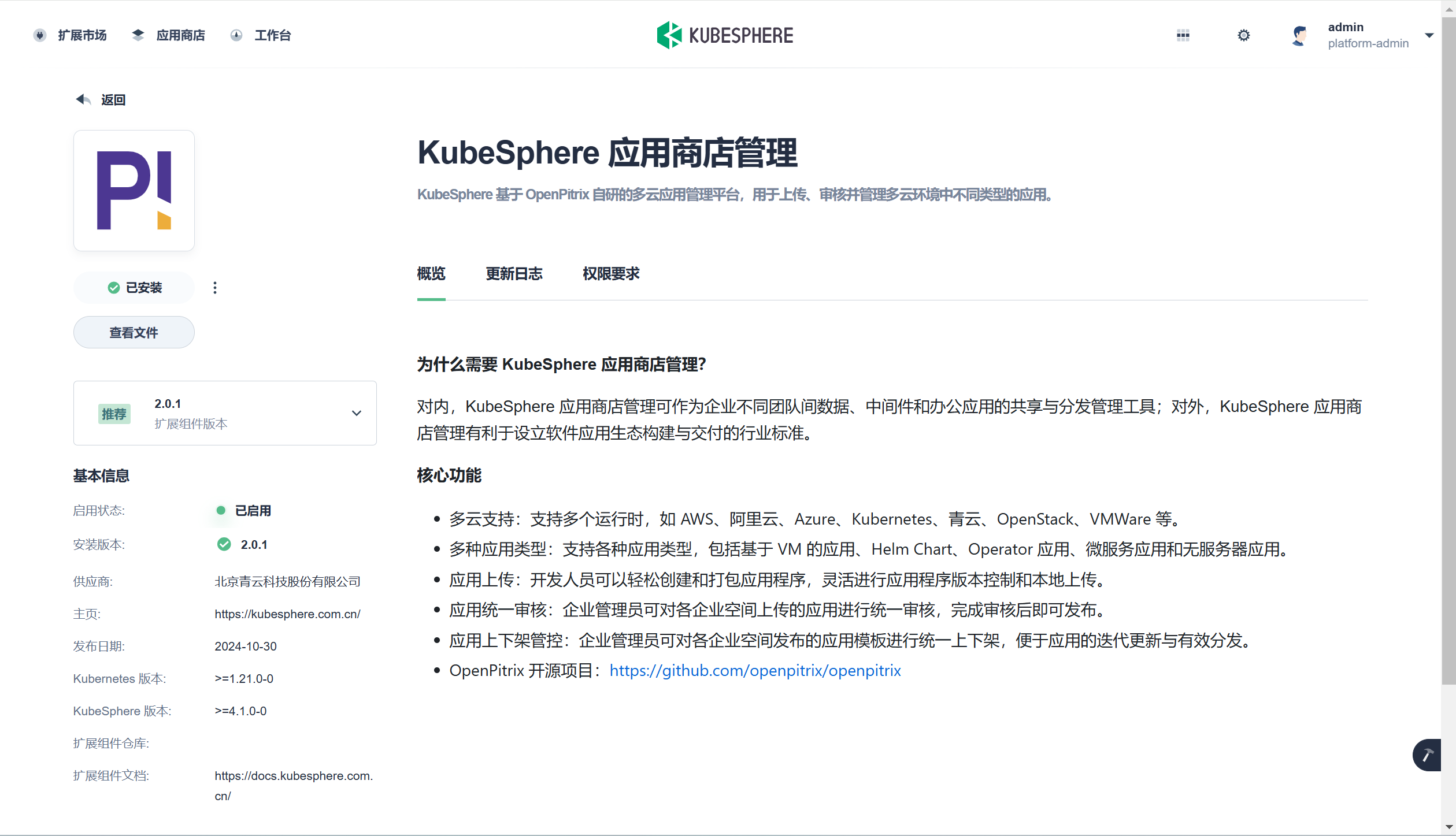

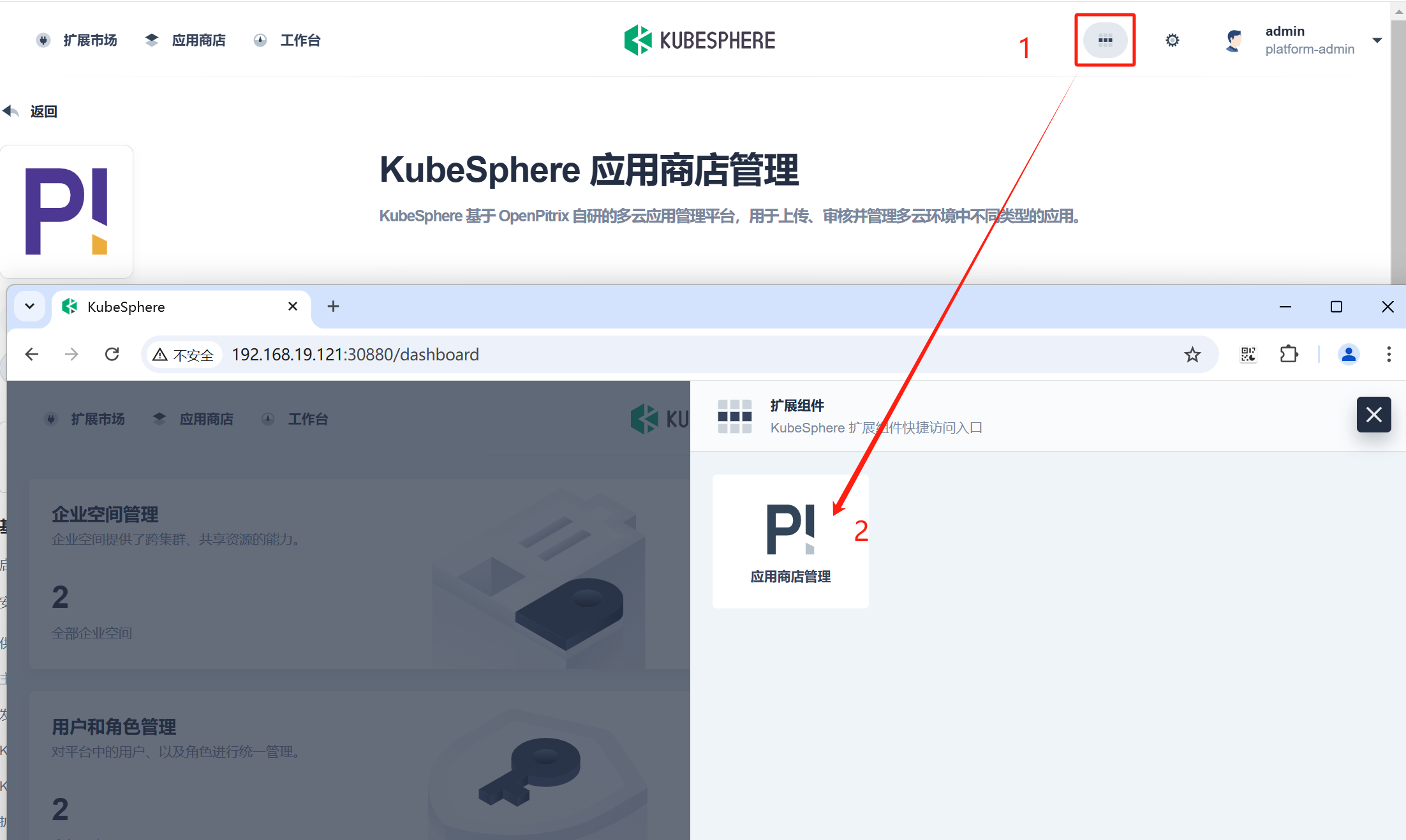

First open the Extension Marketplace and install the extension

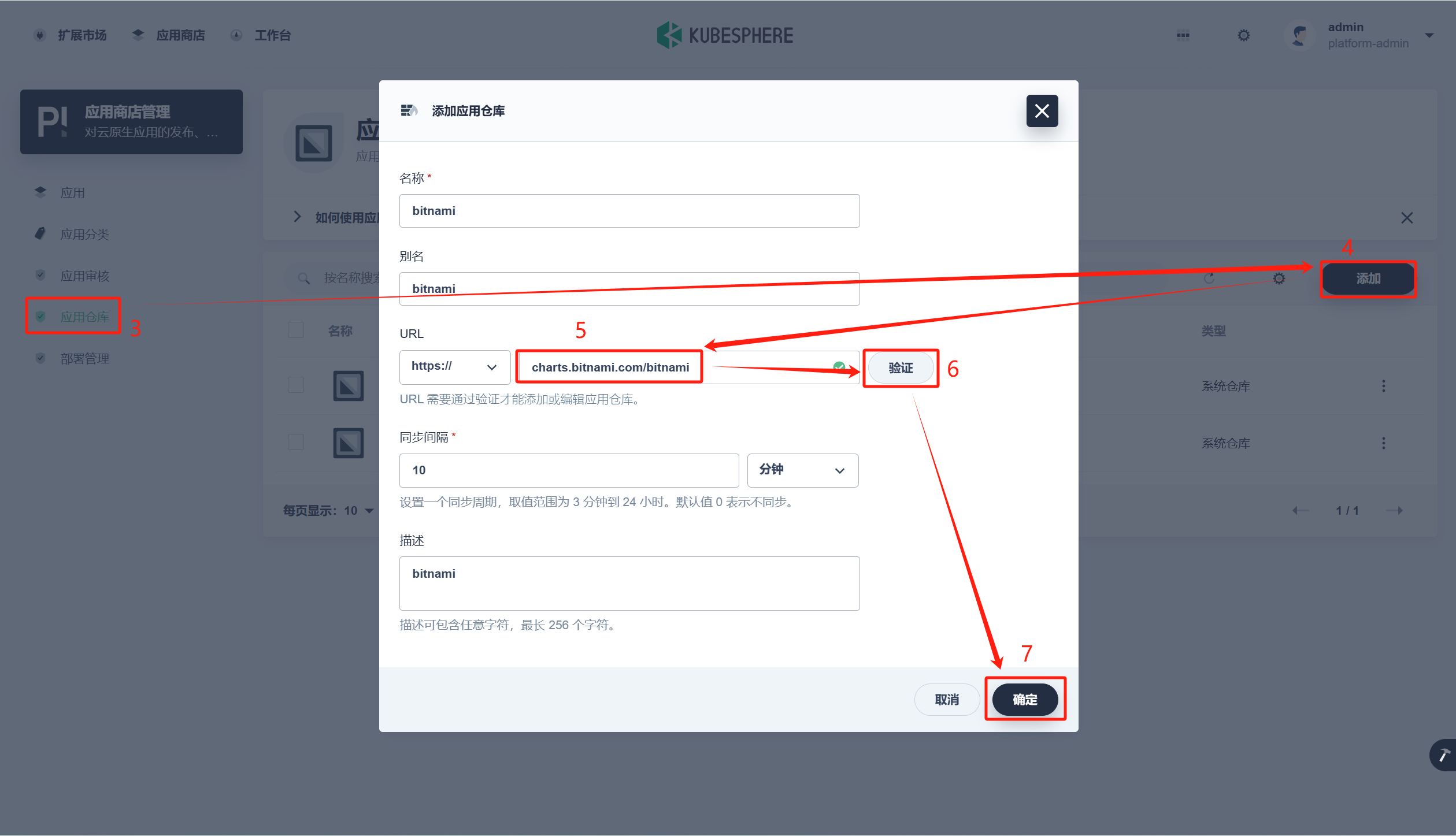

Once it is installed, open App Store Management

Add commonly used Helm repositories